FACE MASK DETECTOR

The weather is getting better and people are going out. I was one of them last week. I went to a restaurant where customers are asked to put their masks on before the waiter comes to the table. Inspired by this, I’ve created a system for the tables.

This is how it works: whenever a customer puts their mask on, a green light turns on at their table, so it’s easy for waiters to see that you’re ready to order. The only thing you need to do is put your mask on.

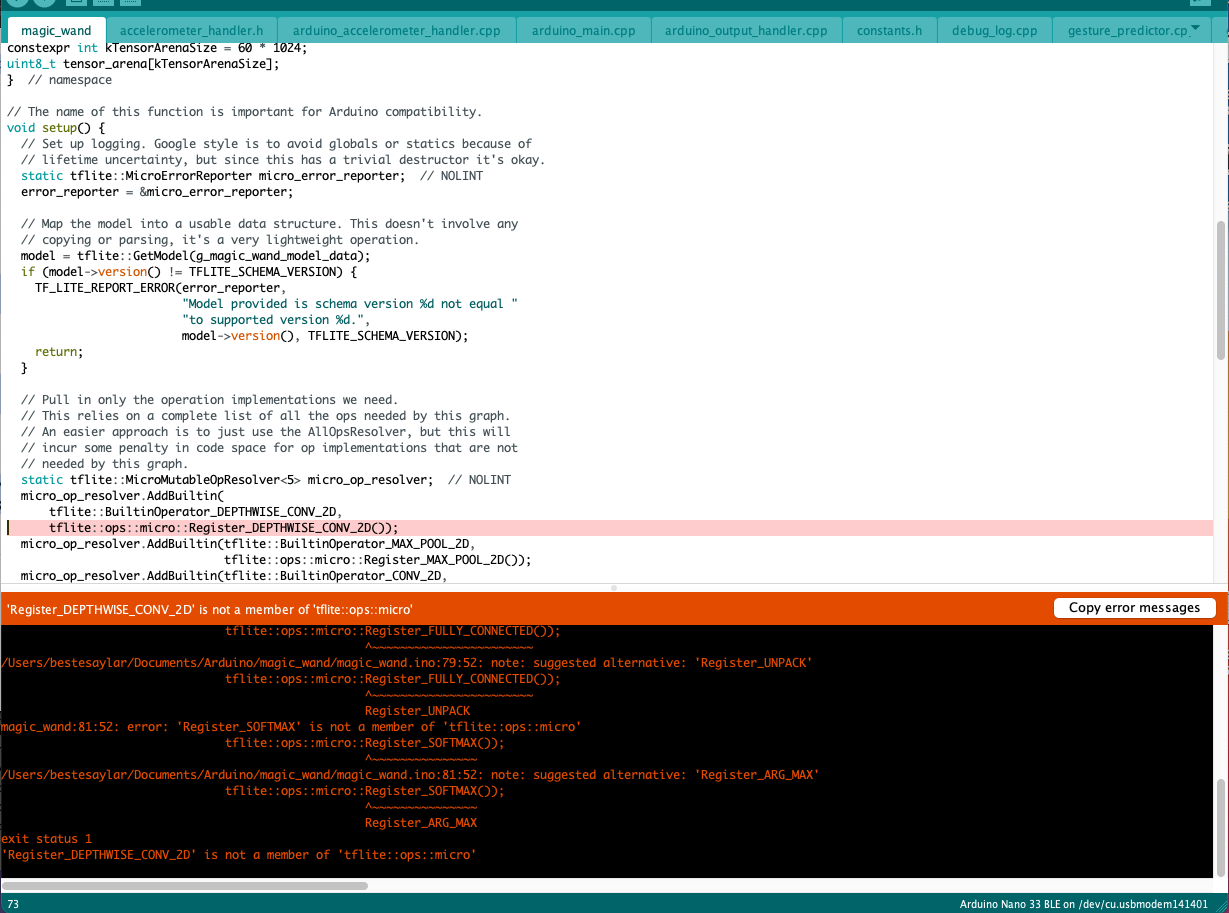

For this mask detection project, I used the ml5.js image classifier to create 2 classes, “with_mask” and “without_mask”. Then I uploaded my model to my Arduino Nano 33 BLE Sense to light up the LEDs. I also designed an interface that shows whether a person is wearing a mask or not. Depending on the result, a label at the bottom of the video appears to alert the user to put their mask on. I used a different emoji for each class.
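To make the logic concrete, here is a minimal sketch of how a classification result could be turned into the LED state and the on-screen label. It is not the project's actual code: the `Prediction` shape, the `tableStateFor` helper, the 0.8 confidence threshold, and the specific emojis are all assumptions made for illustration. Only the two class names and the two messages come from the project.

```typescript
// Hypothetical shape of one classifier result: a class label plus a
// confidence score between 0 and 1.
interface Prediction {
  label: string;
  confidence: number;
}

// What the table and the interface should show for the current frame.
interface TableState {
  ledOn: boolean;  // true = green LED lit on the table
  message: string; // label displayed under the video
}

// Assumed threshold: only treat the mask as "on" when the model is fairly sure.
const MIN_CONFIDENCE = 0.8;

function tableStateFor(predictions: Prediction[]): TableState {
  // Pick the prediction with the highest confidence (null if the list is empty).
  let top: Prediction | null = null;
  for (const p of predictions) {
    if (top === null || p.confidence > top.confidence) {
      top = p;
    }
  }

  // Light the LED only for a confident "with_mask" result.
  if (top !== null && top.label === "with_mask" && top.confidence >= MIN_CONFIDENCE) {
    return { ledOn: true, message: "😷 MASK ON - Ready to Order" };
  }

  // Everything else (no mask, low confidence, no result) keeps the LED off.
  return { ledOn: false, message: "🙂 MASK OFF - Still deciding" };
}
```

Falling back to the LED-off state when the result is uncertain is a deliberate choice in this sketch: the waiter only gets the green light when the model is reasonably confident that the mask is on.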

DEMO

MASK ON - Ready to Order

MASK OFF - Still deciding